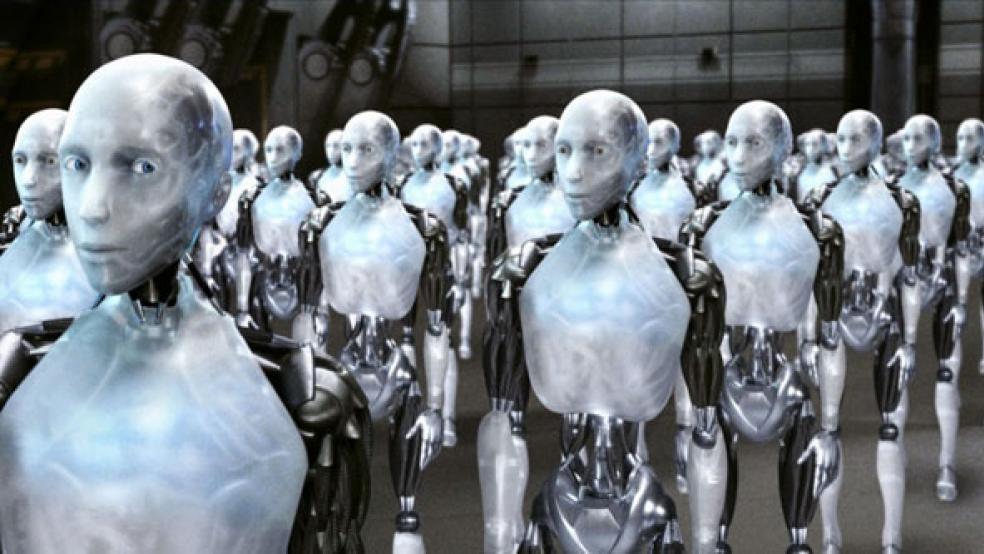

As the Pentagon expands its use of robots in combat and its investment in robot technology, a movement to ban autonomous robots from the battlefield is growing. Those who decry the use of robots argue that removing the human element from warfare would remove all moral judgment; robot soldiers would be unfeeling killing machines.

One researcher, however, believes just the opposite. He argues that robot soldiers would make warfare more ethical, not less.

Ronald Arkin, an artificial intelligence expert at Georgia Tech and author of the book Governing Lethal Behavior in Autonomous Robots, argues in a series of papers that robots can be taught to act morally. He is presenting his ideas at a United Nations meeting in Geneva this week and shared a 2013 paper, “Lethal Autonomous Systems and the Plight of the Non-combatant,” that outlines his views.

Arkin says, “It may be possible to ultimately create intelligent autonomous robotic military systems that are capable of reducing civilian casualties and property damage when compared to the performance of human warfighters.”

In the paper, Arkin argues that it’s the very inhumanity of robots that allows them to make more humane decisions than their human counterparts. For instance, robots could reduce friendly-fire incidents and lower civilian casualties. They could also be programmed to act in what humans would consider a moral way in situations where a human soldier might be tempted to violate the laws of war or ethical and moral codes. He contends that history shows it is impossible to prevent soldiers from violating these laws and codes.

“While I have the utmost respect for our young men and women warfighters, they are placed into conditions in modern warfare under which no human being was ever designed to function,” he writes. “In such a context, expecting a strict adherence to the Laws of War … seems unreasonable and unattainable by a significant number of soldiers.”

Advantages Over Humans

Arkin claims that robots provide an advantage over humans for a host of reasons, including:

- They do not have to worry about self-preservation, and therefore would not have to fire upon targets they simply suspect pose a threat. “There is no need for a ‘shoot first, ask-questions later’ approach, but rather a ‘first-do-no-harm’ strategy can be utilized instead. They can truly assume risk on behalf of the noncombatant,” he writes.

- They have sensors better equipped than human senses to survey the battlefield, allowing them to see through the so-called fog of war.

- They could be designed in a way that prevents them from acting out of anger or frustration.

- They would be unaffected by the physical and psychological trauma of combat.

- They can process more information than a human before having to use deadly force.

- They could independently monitor the ethical behavior of the humans fighting alongside them. “This presence alone might possibly lead to a reduction in human ethical infractions,” Arkin argues.

Arkin’s thesis comes at a time when the military is expanding its use of robots on all fronts. They are already used on the battlefield to detect roadside bombs. Private companies and laboratories are also developing robots that can fight fires, haul gear and drag soldiers to safety. It’s only a matter of time before one is weaponized.

And it appears that the military is buying into Arkin’s argument. The Office of Naval Research will award a $7.5 million grant to researchers at Tufts, Rensselaer Polytechnic Institute, Yale, Georgetown and Brown to develop a robotic system that can tell right from wrong.

In his research, Arkin deals only with the moral questions surrounding the use of robots. He does not address the economic consequences, such as the job losses that would follow the adoption of robot soldiers. In theory, robots could make human infantry redundant, eliminating hundreds of thousands of jobs for traditional soldiers.

Obligation to Use Them?

Arkin argues that if science can create weaponized robots that are programmed to always do the right thing under the laws of war and recognized moral codes, war planners have an obligation to use them.

“If achievable, this would result in a reduction in collateral damage, i.e., noncombatant casualties and damage to civilian property, which translates into saving innocent lives. If achievable this could result in a moral requirement necessitating the use of these systems,” he writes.

Top Reads from The Fiscal Times